Why Model‑Based Development Needs Hand Code

Get the shift‑left benefits without losing engineering freedom

Model‑Based Development (MBD) promised that a control diagram could become an executable spec: press a button, simulate flight, and verify everything works as expected long before hardware shows up.

But even the best toolchains don't auto‑generate everything a real flight computer needs. Someone still has to write the low‑level plumbing—board support packages, RTOS wrappers, watchdogs, secure bootloaders—as well as the infrastructure for telemetry, logging, and high‑rate data links. These modules sit outside the control diagram’s core responsibilities, yet are critical to a safe, certifiable system. In short, the diagram gives you the control law; the rest of the stack still typically lives in hand‑written code.

Where the translation gap hits

When the design or modeling language (e.g., Simulink or Modelica) differs from the deployment language (C/C++, Rust), every change triggers a translation step, and each translation brings its own friction. Reviewers wade through thousands of regenerated lines to find a two‑line gain change; hot fixes applied in flight code never make it back to the model; and license‑bound exporters slow down CI pipelines that should be catching bugs in minutes. The semantic gap becomes a scheduling gap: more meetings, more “who owns this” emails, slower iteration.

You can see this gap most clearly when a high‑level model has to live inside a larger flight‑software stack.

A concrete example is Simulink → PX4 integration. MathWorks’ UAV Toolbox can emit a PX4‑compatible C module, but the generated code must be hand‑wrapped so it links against NuttX, registers a uORB publisher/subscriber set, and obeys the autopilot’s mixer and scheduler rules. A minor diagram tweak—for instance adding an integral anti‑windup clamp—forces regeneration of the entire C module, a re‑merge of the glue code, and a rebuild of the firmware image. The GNC team still owns the .slx file, while the software team owns the CMake targets and uORB bridges that tell PX4 how to schedule the task and route its messages; a hot‑fix on one side is easy to lose on the other. Each iteration therefore becomes half modeling task, half integration fire‑drill.
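To put the "minor diagram tweak" in code terms, here is a minimal sketch of a hand‑written PI controller in Rust. It assumes a fixed sample period and symmetric limits. The type and field names (`PiController`, `i_limit`, `out_limit`) are hypothetical and are not taken from the UAV Toolbox or PX4. The integral anti‑windup clamp is the single `clamp` call on the integrator state; in hand‑written code, adding it is a one‑line change.

```rust
/// Minimal PI controller with an integrator clamp (one simple anti-windup scheme).
/// Hypothetical example: names, gains, and limits are illustrative only.
pub struct PiController {
    pub kp: f64,
    pub ki: f64,
    pub dt: f64,        // sample period in seconds (assumed fixed)
    pub i_limit: f64,   // symmetric bound on the integrator contribution
    pub out_limit: f64, // symmetric actuator limit
    integral: f64,
}

impl PiController {
    pub fn new(kp: f64, ki: f64, dt: f64, i_limit: f64, out_limit: f64) -> Self {
        Self { kp, ki, dt, i_limit, out_limit, integral: 0.0 }
    }

    /// Computes one control step from the tracking error.
    pub fn step(&mut self, error: f64) -> f64 {
        self.integral += self.ki * error * self.dt;
        // Anti-windup: the "one-line tweak" that keeps the integrator bounded.
        self.integral = self.integral.clamp(-self.i_limit, self.i_limit);
        // Output saturation models the actuator limit.
        (self.kp * error + self.integral).clamp(-self.out_limit, self.out_limit)
    }

    /// Current integrator state, exposed for tests and telemetry.
    pub fn integral(&self) -> f64 {
        self.integral
    }
}
```

Clamping the integrator, not only the output, stops it from accumulating while the actuator is saturated, so the loop responds as soon as the error changes sign. Back‑calculation is a common alternative anti‑windup scheme.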

Less obvious but just as costly is the feedback loop from software tweaks back into control design. When a developer changes task priorities to ease CPU load, repacks sensor data into fixed‑point formats, or inserts extra safety wrappers around actuators, they also change the timing, resolution, or latency assumptions the GNC team used when they tuned the controller. A few hundred microseconds of added jitter, a truncated 16‑bit quaternion, or a bounds‑checking guard that clips an output can push a carefully designed loop toward saturation or instability. The control engineer must reopen the model, adjust gains or filters, and regenerate code, only to risk another merge when the software team refactors again. These hidden back‑and‑forth cycles stretch schedules and erode the very shift‑left advantage MBD promised.
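The resolution cost of repacking is easy to measure. The sketch below assumes each unit‑quaternion component in [-1, 1] is packed into a signed 16‑bit Q15 integer; Q15 is a common fixed‑point convention chosen here for illustration, since the text does not name a format. The round trip loses up to about half an LSB (roughly 1.5e-5) per component: small, but a change to the resolution the controller was tuned against.

```rust
/// Packs a value in [-1, 1] into Q15 fixed point (1 sign bit, 15 fractional bits).
/// Assumed format for illustration only.
pub fn to_q15(x: f32) -> i16 {
    // Scale, round to nearest, then saturate: +1.0 itself is not representable.
    let scaled = (x * 32768.0).round();
    scaled.clamp(i16::MIN as f32, i16::MAX as f32) as i16
}

/// Unpacks a Q15 value back into floating point.
pub fn from_q15(q: i16) -> f32 {
    q as f32 / 32768.0
}

/// Round-trips a quaternion [w, x, y, z] through Q15 and returns the
/// worst per-component error introduced by the packing.
pub fn q15_roundtrip_error(q: [f32; 4]) -> f32 {
    q.iter()
        .map(|&c| (from_q15(to_q15(c)) - c).abs())
        .fold(0.0, f32::max)
}
```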

In this workflow, the software team never gets the benefit of the model, and the GNC team is the only one simulating the actual control system with realistic conditions. A Simulink model often contains the only high-fidelity representation of the vehicle dynamics—rigid-body motion, aerodynamic loads, wind gusts, sensor latencies—and that model doesn't ship with the generated C code. So while both teams might perform Software-in-the-Loop tests, the software team's version often runs with static or placeholder inputs, not dynamic ones derived from a physics-based plant. This limits the ability to test control stability, failure responses, or sensitivities early in the cycle. Without shared access to a dynamics model or unified simulation environment, integration becomes less about behavior and more about plumbing: Does it compile? Does it publish the right data? Meanwhile, control-loop issues—saturation, instability, poor response—might be discovered late in HIL or flight test, rather than during shared bench-level simulation.
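Even a crude plant model turns a static SIL test into a dynamic one. The sketch below makes strong simplifying assumptions: a 1‑D double‑integrator "vehicle", a hand‑tuned PD law, an acceleration limit, a fixed 10 ms step, and no sensor noise. All names are hypothetical. The point is that the closed loop runs in plain Rust, so the same check can run on a developer's laptop or a CI runner and test behavior (does the loop settle?) rather than only plumbing.

```rust
/// Hypothetical 1-D plant: a double integrator driven by an acceleration
/// command. A stand-in for a real rigid-body model.
pub struct DoubleIntegrator {
    pub pos: f64,
    pub vel: f64,
}

impl DoubleIntegrator {
    /// Semi-implicit Euler step with an actuator limit on acceleration.
    pub fn step(&mut self, accel_cmd: f64, max_accel: f64, dt: f64) {
        let a = accel_cmd.clamp(-max_accel, max_accel);
        self.vel += a * dt;
        self.pos += self.vel * dt;
    }
}

/// Hypothetical PD position controller.
pub fn pd_control(setpoint: f64, pos: f64, vel: f64, kp: f64, kd: f64) -> f64 {
    kp * (setpoint - pos) - kd * vel
}

/// Closes the loop for `seconds` of simulated time and returns the final
/// absolute position error.
pub fn simulate(setpoint: f64, seconds: f64, dt: f64) -> f64 {
    let mut plant = DoubleIntegrator { pos: 0.0, vel: 0.0 };
    let steps = (seconds / dt) as usize;
    for _ in 0..steps {
        // kp = kd = 4 gives a critically damped linear loop: s^2 + 4s + 4.
        let cmd = pd_control(setpoint, plant.pos, plant.vel, 4.0, 4.0);
        plant.step(cmd, 2.0, dt);
    }
    (setpoint - plant.pos).abs()
}
```

A test that asserts settling within a time budget would fail if a software change added enough delay or clipping to destabilize the loop, which a static‑input test cannot detect.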

Unified‑language workflows

Many teams bypass the translator entirely. Open‑source frameworks like Basilisk or PX4 treat plain C++ as both the simulation language and the flight language: one function, one source file, everywhere. This kills the semantic gap, but it expects every controls engineer to become at least conversational in embedded development, a reasonable trade for some teams and a non‑starter for others. The hard truth is that most controls experts should not be writing production‑grade embedded code.

Pictorus: graphics on top, always Rust underneath

Pictorus offers a hybrid approach. You still drag blocks on a canvas, but each block is real Rust code that you can open, review, and test with the same tools the software team already uses. The only artifact the tool generates is a tiny scheduler manifest that says “call component A, then B, then C.” No mass of auto‑written C, no opaque glue files.
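To illustrate the shape of a manifest that only says "call A, then B", here is a minimal sketch. It assumes a hypothetical `Component` trait and a shared `Signals` struct; it is not Pictorus's actual manifest format or API, only the general pattern of hand‑written components plus a short, generated list that fixes their execution order.

```rust
/// Signals shared between blocks during one scheduler tick (hypothetical layout).
#[derive(Debug, Default)]
pub struct Signals {
    pub measured: f64,
    pub command: f64,
}

/// Hypothetical interface that every hand-written block implements.
pub trait Component {
    fn name(&self) -> &'static str;
    fn tick(&mut self, s: &mut Signals);
}

/// Block A: proportional control on the measured value.
pub struct Gain {
    pub k: f64,
    pub setpoint: f64,
}

impl Component for Gain {
    fn name(&self) -> &'static str { "gain" }
    fn tick(&mut self, s: &mut Signals) {
        s.command = self.k * (self.setpoint - s.measured);
    }
}

/// Block B: actuator saturation.
pub struct Saturate {
    pub limit: f64,
}

impl Component for Saturate {
    fn name(&self) -> &'static str { "saturate" }
    fn tick(&mut self, s: &mut Signals) {
        s.command = s.command.clamp(-self.limit, self.limit);
    }
}

/// The "manifest": in this sketch, the only generated artifact is this ordered list.
pub fn manifest() -> Vec<Box<dyn Component>> {
    vec![
        Box::new(Gain { k: 5.0, setpoint: 1.0 }),
        Box::new(Saturate { limit: 2.0 }),
    ]
}

/// One scheduler tick: call each component in manifest order and
/// return the names in the order they ran.
pub fn run_tick(components: &mut [Box<dyn Component>], s: &mut Signals) -> Vec<&'static str> {
    let mut order = Vec::new();
    for c in components.iter_mut() {
        c.tick(s);
        order.push(c.name());
    }
    order
}
```

Order matters here: running `Saturate` before `Gain` would let an unclamped command through, which is exactly the kind of decision a reviewer can see in a short manifest.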

Why that matters:

- What you simulate is what you fly. The Rust you inspect in the browser is the Rust that ends up on the flight computer.

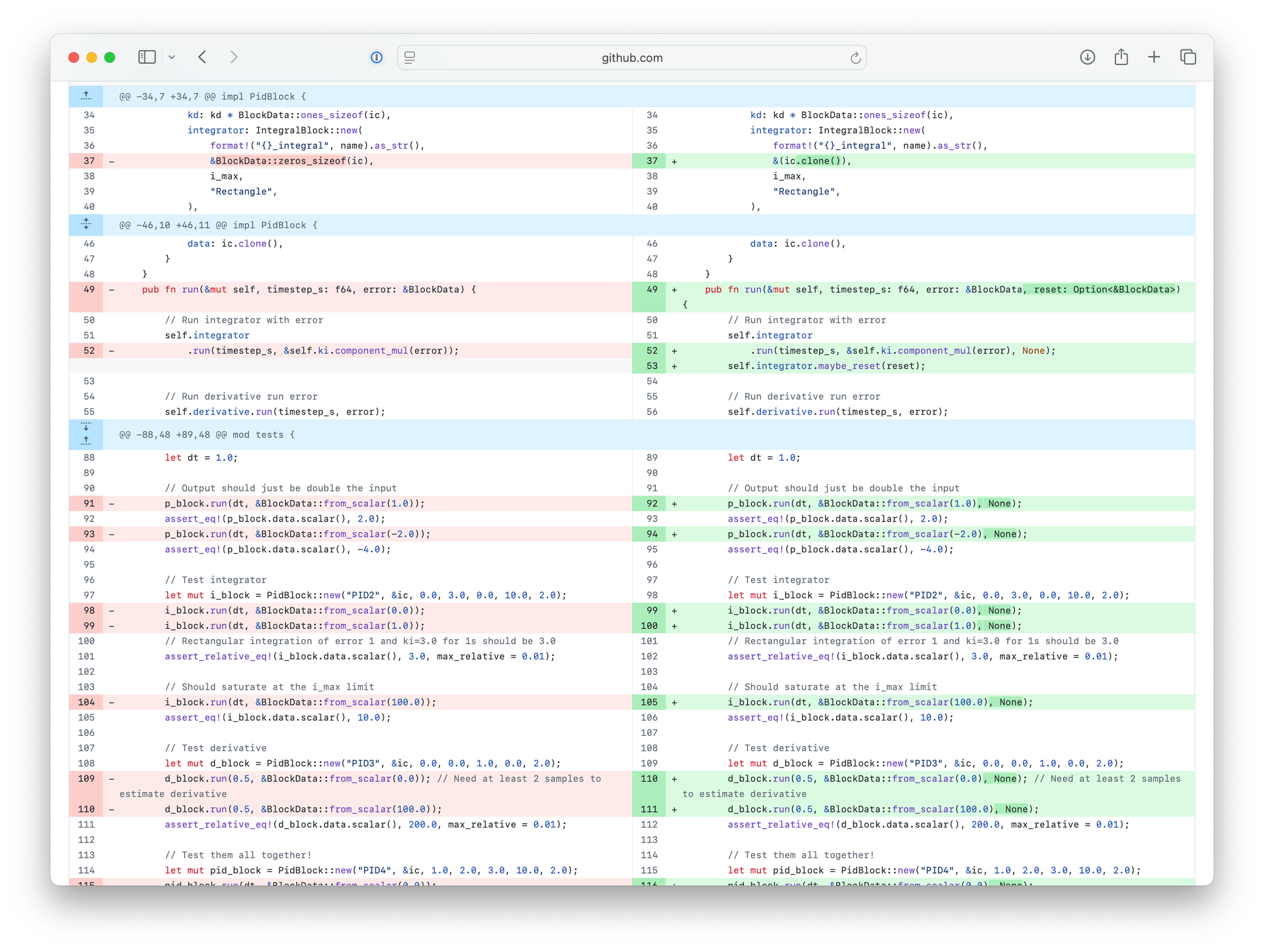

- Readable diffs. A one‑line tweak shows up as exactly one line in Git—not a 4 000‑line file churn.

- Debugger‑friendly. Stack traces and breakpoints point at your own source, not at generated wrappers.

- Audit‑friendly. Reviewers read hand‑written logic and a single‑file scheduler, not megabytes of boilerplate.

- Gentle on newcomers. Controls engineers can live in the canvas today and learn Rust block‑by‑block tomorrow.

Because every environment—simulation, bench, and flight—runs the exact same Rust code, Pictorus eliminates the usual two‑repo divide. The GNC team can check in a new guidance component, and the software team can regression‑test it in the same pull request, on the same CI runner, against the same hardware abstraction layer. No more exporting models, passing zip files around, or asking “which version are we flying?” All artifacts (models, source, test vectors, binaries) live in one repository, and the whole project builds with one command.
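One common way to run identical control code in simulation, on the bench, and in flight is to write it against a hardware‑abstraction trait and swap the implementation per target. The sketch below assumes a hypothetical `Imu` trait: the simulated implementation replays scripted samples, while a flight implementation (omitted, because it is board‑specific) would wrap the real sensor driver. The control function itself never changes.

```rust
/// Hypothetical hardware-abstraction trait for a rate gyro.
pub trait Imu {
    /// Returns the body roll rate in rad/s, or None if no fresh sample is available.
    fn roll_rate(&mut self) -> Option<f64>;
}

/// Simulation/bench implementation: replays a scripted sequence of samples.
pub struct ScriptedImu {
    samples: Vec<f64>,
    idx: usize,
}

impl ScriptedImu {
    pub fn new(samples: Vec<f64>) -> Self {
        Self { samples, idx: 0 }
    }
}

impl Imu for ScriptedImu {
    fn roll_rate(&mut self) -> Option<f64> {
        let sample = self.samples.get(self.idx).copied();
        self.idx += 1;
        sample
    }
}

// A flight implementation would wrap the board's IMU driver and implement
// the same trait; it is omitted here because it is hardware-specific.

/// Rate-damping control law, written once and generic over any `Imu`.
/// Holds the previous command when no fresh sample is available.
pub fn damp_roll<I: Imu>(imu: &mut I, k: f64, last_cmd: f64) -> f64 {
    match imu.roll_rate() {
        Some(rate) => -k * rate,
        None => last_cmd,
    }
}
```

Because the missing‑sample case is an `Option`, every target implementation has to decide explicitly what "no data" means, instead of returning a sentinel value.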

Why Rust feels like a modeling language, not just a coding language

Rust works in this workflow because it behaves more like an executable specification than a traditional low-level language. When a control algorithm is modeled in Pictorus, the Rust code reads cleanly and closely reflects the structure and logic of the model. Every variable has a clear owner, and every message follows an explicit path. Crucially, the Rust language enforces these structures—not just at runtime, but at compile time—preserving the designer’s intent all the way to silicon.
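Here is a small sketch of what "every variable has a clear owner, and every message follows an explicit path" looks like in practice, using only the standard library's `std::sync::mpsc` channel. The message type and the estimator/controller roles are hypothetical. Sending moves the message into the channel, so the producer cannot keep using it afterwards; the commented‑out line would be rejected at compile time.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical message from an estimator task to a controller task.
#[derive(Debug, Clone, PartialEq)]
pub struct AttitudeEstimate {
    pub roll: f64,
    pub pitch: f64,
}

/// Spawns an estimator thread that sends `n` estimates and collects them
/// on the receiving (controller) side.
pub fn run_pipeline(n: usize) -> Vec<AttitudeEstimate> {
    let (tx, rx) = mpsc::channel::<AttitudeEstimate>();

    let producer = thread::spawn(move || {
        for i in 0..n {
            let est = AttitudeEstimate { roll: i as f64 * 0.1, pitch: 0.0 };
            // Ownership of `est` moves into the channel here.
            tx.send(est).expect("receiver dropped");
            // println!("{:?}", est); // compile error: borrow of moved value `est`
        }
        // `tx` is dropped when the thread ends, which closes the channel.
    });

    // The receiver now owns each message; iteration ends when the channel closes.
    let received: Vec<AttitudeEstimate> = rx.iter().collect();
    producer.join().expect("producer panicked");
    received
}
```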

For an MBD team, that matters in three ways.

- Early guard‑rails. In safe Rust, whole classes of runtime crashes (null dereferences, data races, use‑after‑free) are rejected at compile time. That pushes entire categories of bugs from late‑stage HIL back to “does it build?”, which is the shift‑left the V‑model has always promised.

- Readable by both camps. A controls engineer can glance at a Rust block and still recognize the equations they dragged onto the canvas; a software engineer can run cargo test and drop a breakpoint in familiar source code. Everyone talks about the same lines, not about “the model vs the code.”

- Traceable without spreadsheets. Cargo.lock pins the exact version and checksum of every dependency crate, and a rust-toolchain.toml file can pin the compiler version, so the inputs to a build are recorded in files that live in the repository. With both checked in, running cargo build --release days or months later resolves the same dependency graph with the same toolchain; a bit‑for‑bit identical binary may still need extra care, such as remapping absolute paths that get embedded in the output. With plain C++ and gcc you get Makefiles or CMake trees that reference system headers, global compiler versions, and script flags. Cargo gives you much of that determinism out of the box, with no extra tooling and no spreadsheets.
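As an example of a block both camps can read, here is a hypothetical first‑order lag written to mirror its difference equation line for line, with a unit test that `cargo test` would pick up. The discretization (backward Euler of τ·dy/dt = u − y, giving α = dt/(τ + dt)) is an assumption chosen for the sketch, not a claim about how any particular tool discretizes the block.

```rust
/// Discrete first-order lag, written to mirror the block equation
///     y[k] = y[k-1] + alpha * (u[k] - y[k-1]),   alpha = dt / (tau + dt)
/// (backward-Euler discretization of tau * dy/dt = u - y; hypothetical block).
pub struct FirstOrderLag {
    alpha: f64,
    y: f64,
}

impl FirstOrderLag {
    pub fn new(tau: f64, dt: f64) -> Self {
        Self { alpha: dt / (tau + dt), y: 0.0 }
    }

    pub fn step(&mut self, u: f64) -> f64 {
        self.y += self.alpha * (u - self.y);
        self.y
    }
}

#[cfg(test)]
mod tests {
    use super::*;

    // `cargo test` runs this like any other unit test.
    #[test]
    fn step_response_after_one_time_constant() {
        let (tau, dt) = (0.1, 0.001);
        let mut f = FirstOrderLag::new(tau, dt);
        let mut y = 0.0;
        for _ in 0..100 {
            y = f.step(1.0);
        }
        // Continuous-time answer is 1 - e^-1 (about 0.632); the discrete filter is close.
        assert!((y - 0.632).abs() < 0.01);
    }
}
```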

In short, Rust lets the model and the hand-written modules share one codebase—no large translator, no semantic drift. Could this also be done in C++? Yes—but Rust’s stricter guarantees, modern tooling, and safety-by-default approach make it a better fit for teams that want to shift left and simplify integration. It offers the same unification promise, but with more built-in confidence.

One repository, one truth

When the model, the hand code, and the tests all live in a single Rust repository, every engineer can run the exact same simulation and build the exact same binary. A controls change triggers cargo test and a Software‑in‑the‑Loop run in CI; a software tweak gets simulated in Model‑in‑the‑Loop before it ever touches hardware. One commit hash pins the exact source, tests, and build configuration that the DO‑178C Table A‑5 objectives (verification of coding and integration outputs) are evaluated against. Compare that to the classic hand‑off: model in one repo, generated C in another, trace matrices to keep them in sync, and a lingering question of which artifact counts as the truth.
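In a single repository, test vectors can sit right next to the component they check. The sketch below assumes a hypothetical rate‑limiter block and a small table of input/expected‑output pairs stored as a constant; in practice the vectors might be loaded from a file in the same repo. The regression check is an ordinary function that a unit test or a SIL job in CI could call.

```rust
/// Hypothetical block under test: limits how fast a command may change.
pub struct RateLimiter {
    max_delta: f64, // largest allowed change per step
    last: f64,
}

impl RateLimiter {
    pub fn new(max_delta: f64) -> Self {
        Self { max_delta, last: 0.0 }
    }

    pub fn step(&mut self, input: f64) -> f64 {
        let delta = (input - self.last).clamp(-self.max_delta, self.max_delta);
        self.last += delta;
        self.last
    }
}

/// Hypothetical golden vectors: (input, expected output) per step,
/// for max_delta = 0.25 starting from zero.
pub const GOLDEN: [(f64, f64); 6] = [
    (1.0, 0.25),
    (1.0, 0.5),
    (1.0, 0.75),
    (1.0, 1.0),
    (0.0, 0.75),
    (0.8, 0.8),
];

/// Replays the vectors and returns the index of the first mismatching step, if any.
pub fn check_golden(tol: f64) -> Result<(), usize> {
    let mut rl = RateLimiter::new(0.25);
    for (k, &(input, expected)) in GOLDEN.iter().enumerate() {
        if (rl.step(input) - expected).abs() > tol {
            return Err(k);
        }
    }
    Ok(())
}
```

Returning the failing step index, rather than a bare pass/fail, makes a CI failure point directly at the vector that changed.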

Bottom line: MBD accelerates design, but hand‑written code is where theory meets silicon. Writing that code in the same language you model in, and letting the Rust compiler enforce memory and thread safety, keeps the shift‑left promise without drowning in generated C. Model early, test continuously, fly sooner.